Cooperative Exploration for Multi-Agent Deep Reinforcement Learning

University of Illinois at Urbana-Champaign (UIUC)

International Conference on Machine Learning (ICML), 2021

Abstract

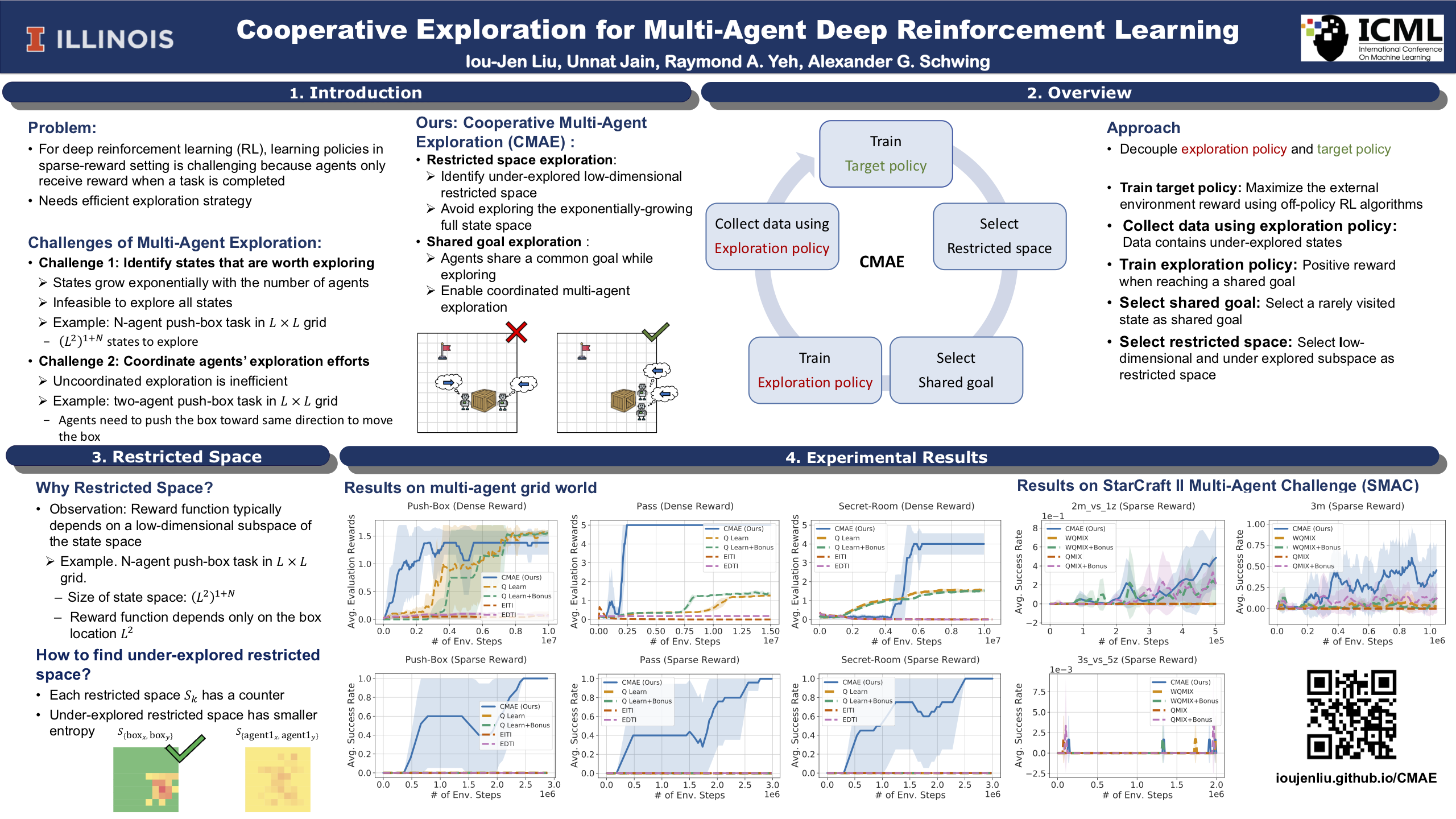

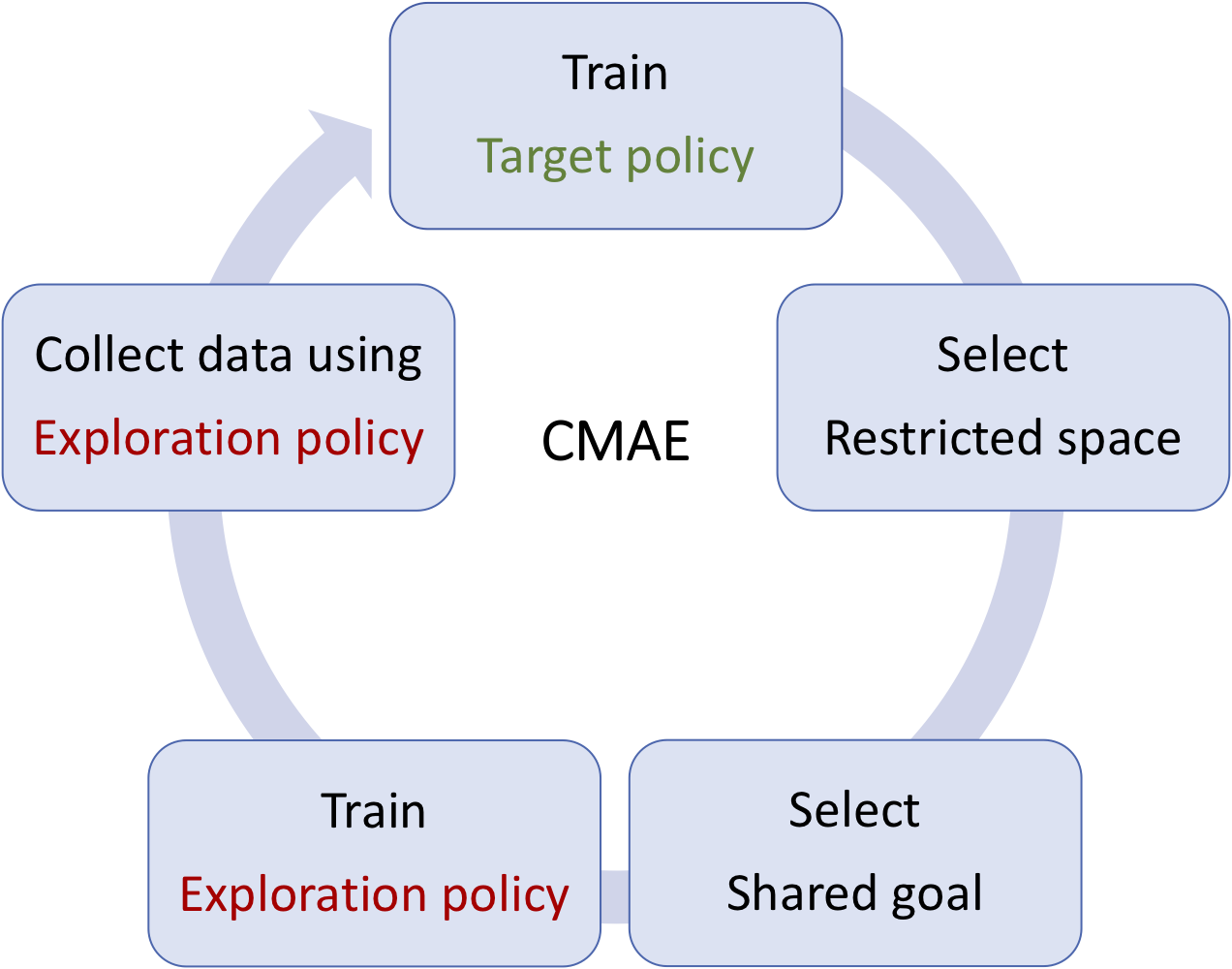

Exploration is critical for good results in deep reinforcement learning and has attracted much attention. However, existing multi-agent deep reinforcement learning algorithms still use mostly noise-based techniques. Very recently, exploration methods that consider cooperation among multiple agents have been developed. However, existing methods suffer from a common challenge: agents struggle to identify states that are worth exploring, and hardly coordinate exploration efforts toward those states. To address this shortcoming, in this paper, we propose cooperative multi-agent exploration (CMAE): agents share a common goal while exploring. The goal is selected from multiple projected state spaces via a normalized entropy-based technique. Then, agents are trained to reach this goal in a coordinated manner. We demonstrate that CMAE consistently outperforms baselines on various tasks, including a sparse-reward version of the multiple-particle environment (MPE) and the StarCraft Multi-Agent Challenge (SMAC).
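The goal-selection step described in the abstract can be illustrated with a small sketch. This is not the authors' implementation; it only shows one plausible reading of "selecting a goal from multiple projected state spaces via normalized entropy" under stated assumptions: states are tuples of discrete values, a projected space keeps a subset of the state dimensions, the space whose visitation counts have the lowest normalized entropy is treated as the least evenly explored, and the least-visited state seen in that space becomes the shared goal. The names `normalized_entropy`, `select_goal`, `dim_sizes`, and `max_dims` are hypothetical. Training the agents to reach the goal is omitted.

```python
import math
from collections import Counter
from itertools import combinations


def normalized_entropy(counts, space_size):
    """Entropy of the empirical visitation distribution, scaled to [0, 1]
    by dividing by log(space_size), the entropy of a uniform distribution."""
    total = sum(counts.values())
    if total == 0 or space_size < 2:
        return 0.0
    h = -sum((c / total) * math.log(c / total) for c in counts.values() if c > 0)
    return h / math.log(space_size)


def select_goal(visited_states, dim_sizes, max_dims=2):
    """Return (dims, goal): the projected space with the lowest normalized
    entropy and the least-visited state observed in that space.

    Assumption: each state is a tuple of discrete values, and dim_sizes[d]
    is the number of values dimension d can take.
    """
    best = None
    for k in range(1, max_dims + 1):
        for dims in combinations(range(len(dim_sizes)), k):
            # Project every visited state onto the chosen dimensions and count.
            counts = Counter(tuple(s[d] for d in dims) for s in visited_states)
            size = math.prod(dim_sizes[d] for d in dims)
            score = normalized_entropy(counts, size)
            if best is None or score < best[0]:
                best = (score, dims, counts)
    _, dims, counts = best
    # Shared goal: the rarest projected state seen so far in that space.
    goal = min(counts, key=counts.get)
    return dims, goal


# Toy data: x is covered evenly, y is almost always 0.
visited = [(0, 0), (1, 0), (2, 0), (3, 0), (0, 0), (1, 0), (2, 0), (3, 1)]
dims, goal = select_goal(visited, dim_sizes=[4, 4])
print(dims, goal)
```

In this toy data the projection onto the second dimension is the most unevenly visited, so the sketch picks that space and proposes its rarely seen value as the goal all agents would share.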

Materials

Presentation

Citation

@inproceedings{LiuICML2021,
  author = {I.-J. Liu and U. Jain and R.~A. Yeh and A.~G. Schwing},
  title = {{Cooperative Exploration for Multi-Agent Deep Reinforcement Learning}},
  booktitle = {Proc. ICML},
  year = {2021},
}

Acknowledgement

This work is supported in part by NSF under Grants #1718221, #2008387, #2045586, and MRI #1725729, and by UIUC, Samsung, Amazon, 3M, and Cisco Systems Inc. RY is supported by a Google Fellowship.