Asking for Knowledge: Training RL Agents to Query External Knowledge Using Language

Microsoft Research

University of Illinois at Urbana-Champaign

International Conference on Machine Learning (ICML), 2022

Abstract

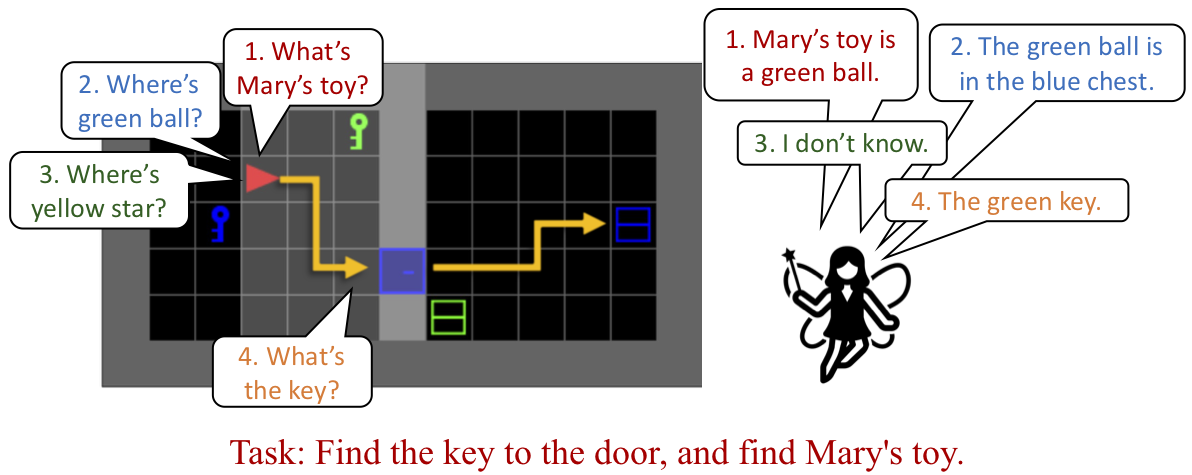

To solve difficult tasks, humans ask questions to acquire knowledge from external sources. In contrast, classical reinforcement learning agents lack such an ability and often resort to exploratory behavior. This is exacerbated because few present-day environments support querying for knowledge. To study how agents can be taught to query external knowledge via language, we first introduce two new environments: the grid-world-based Q-BabyAI and the text-based Q-TextWorld. In addition to physical interactions, an agent can query an external knowledge source specialized for these environments to gather information. Second, we propose the "Asking for Knowledge" (AFK) agent, which learns to generate language commands to query for meaningful knowledge that helps solve the tasks. AFK leverages a non-parametric memory, a pointer mechanism, and an episodic exploration bonus to tackle (1) a large query language space, (2) irrelevant information, and (3) delayed reward for making meaningful queries. Extensive experiments demonstrate that the AFK agent outperforms recent baselines on the challenging Q-BabyAI and Q-TextWorld environments.
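To make the idea of an episodic exploration bonus over a non-parametric memory more concrete, here is a minimal Python sketch. It is not the AFK implementation: the class name `EpisodicQueryMemory`, the bonus value of 0.1, and the exact-match novelty test are all assumptions made for illustration. The sketch only shows the general pattern of rewarding a query the first time it returns new information within an episode.

```python
class EpisodicQueryMemory:
    """Hypothetical non-parametric memory holding raw responses from one episode."""

    def __init__(self, bonus=0.1):
        self.bonus = bonus      # assumed bonus size, not taken from the paper
        self.responses = []     # retrieved knowledge, in order of arrival

    def reset(self):
        """Clear the memory at the start of a new episode."""
        self.responses.clear()

    def add(self, response):
        """Store a response; return the bonus if it is new this episode, else 0.0."""
        if not response or response in self.responses:
            return 0.0
        self.responses.append(response)
        return self.bonus


memory = EpisodicQueryMemory(bonus=0.1)
rewards = [memory.add(r) for r in [
    "the key is in the red room",
    "the key is in the red room",   # repeated answer: no bonus
    "",                             # empty answer: no bonus
    "the door is locked",
]]
print(rewards)  # [0.1, 0.0, 0.0, 0.1]
```

In this sketch the bonus gives the agent an immediate signal for informative queries, which is one plausible way to offset the delayed task reward mentioned above. A learned agent would add this bonus to the environment reward during training.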

Videos: Qualitative results of AFK agents on Q-BabyAI tasks

Materials

Citation

@inproceedings{AFK2022,
  author = {Iou-Jen Liu$^\ast$ and Xingdi Yuan$^\ast$ and Marc-Alexandre C\^{o}t\'{e}$^\ast$ and Pierre-Yves Oudeyer and Alexander G. Schwing},
  title = {Asking for Knowledge: Training RL Agents to Query External Knowledge Using Language},
  booktitle = {International Conference on Machine Learning (ICML)},
  year = {2022},
  note = {$^\ast$ equal contribution}
}

Acknowledgement

This work is supported in part by Microsoft Research, the National Science Foundation under Grants No. 1718221, 2008387, 2045586, 2106825, MRI #1725729, NIFA award 2020-67021-32799, and AWS Research Awards.